The OnStateInit and OnStateChanged lifecycle events of the DataGrid in Telerik UI for Blazor give you almost complete control over every part of the grid. Learn how to use them in this tutorial.

The lifecycle events of the DataGrid in Telerik UI for Blazor make it easy to both simplify and centralize functionality that would otherwise require more complex code spread across multiple events. Before implementing any row- or button-specific functionality, you should always check whether it would be easier to put that code in one of the grid’s lifecycle events (e.g., OnStateChanged, OnCreate, OnDelete).

The two most powerful of these lifecycle methods are the ones that let you manage your grid’s state: OnStateInit and OnStateChanged. These two methods give you the ability to both react to and change the grid’s state… which is, basically, everything about the grid: what filters are applied, what page is being displayed, which rows are selected, what groupings are in place, and more. There are two events so that you can manage the grid’s state as the grid is first loaded (OnStateInit) and as the user interacts with the grid (OnStateChanged).

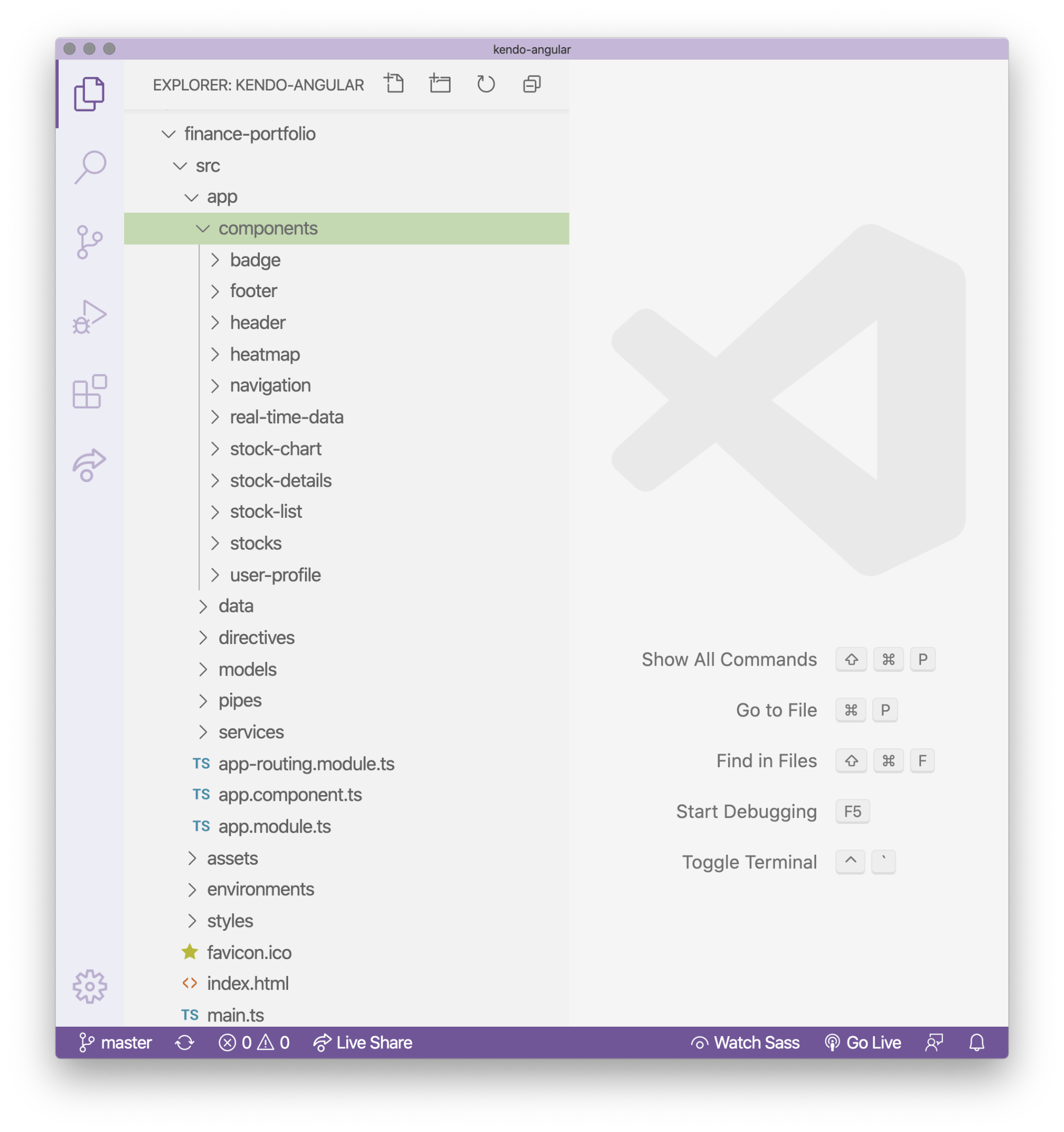

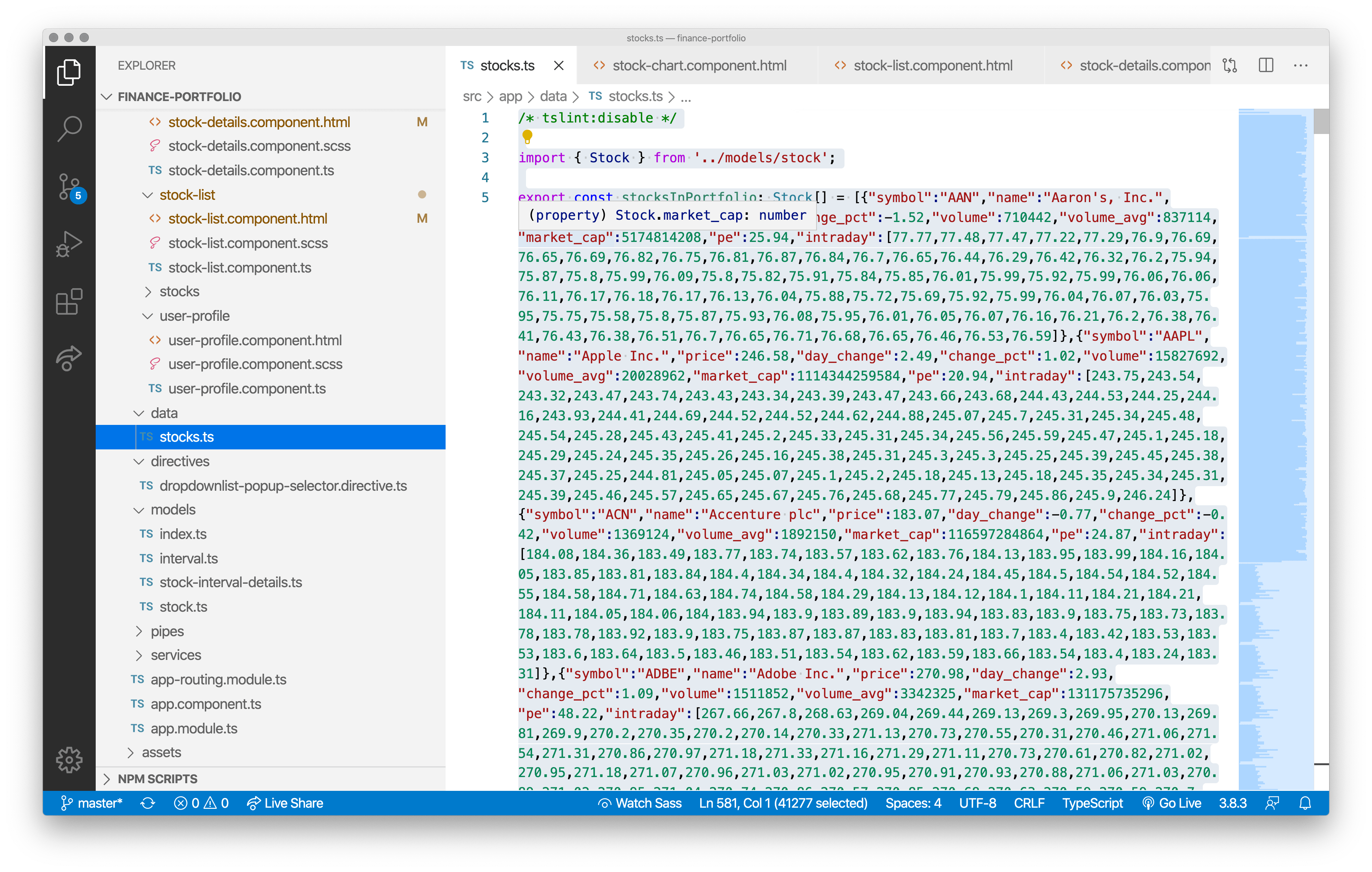

Some technical background (feel free to skip this paragraph): I created the project in this post using version 2.12.0 of the Telerik controls, Visual Studio 2019 preview (version 16.6.0 Preview 6.0), and ASP.NET Core 16.6.934/Razor Language Tools 16.1.0/WebAssembly 3.2.0. The base for my project is the Telerik C# Blazor Application template, using the Blank Client App template. I added a DataGrid to the Index.razor page included in the template. I prefer to keep my code and markup in separate files, so I added an Index.razor.cs class file to my Pages folder and marked it as a partial class. All the code you see in this post is from that C# file.

Controlling the Grid’s Initial Load: Markup

To demonstrate the power of manipulating the DataGrid’s state as it loads in the OnStateInit event, consider a scenario where this component that displays a list of employees is passed a specific employee. In this scenario, as the grid initializes, I’ll have the grid display the page that includes the requested employee and make that employee the currently selected row. When the component is displayed, the requested employee will both be on the screen and ready to be modified.

My initial markup for the DataGrid ties it to a field called MyData that holds the collection of Employee objects, turns on several features (paging, selection, and filtering), sets the page size to another field (cleverly called pageSize), and sets up a field called theGrid to let me refer to the grid from my code:

    <TelerikGrid Data="@MyData" Height="400px"
                 Pageable="true" PageSize="@pageSize"
                 SelectionMode="@GridSelectionMode.Single"
                 FilterMode="Telerik.Blazor.GridFilterMode.FilterRow"
                 @ref="theGrid">

The next step is to tie a method I’ve called GridInitializing to the grid’s OnStateInit event. To do that, and capture the GridStateEventArgs object generated by the event, I need to set the grid’s OnStateInit attribute to a lambda expression. That additional markup looks like this:

    @ref="theGrid"
    OnStateInit="(GridStateEventArgs<Employee> args) => GridInitializing(args)">

Controlling the Grid’s Initial Load: Code

With my markup taken care of, I can switch to my class file and write some code. First, I set up the fields that hold the data displayed by the grid (MyData), the page size (pageSize), and the field tied to my grid’s ref attribute (theGrid). I loaded some dummy data into MyData in my class’s constructor and I’ve omitted that here:

    partial class Index
    {
        IEnumerable<Employee> MyData;
        int pageSize = 10;
        TelerikGrid<Employee> theGrid;
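The Employee class and the dummy data are not shown in this post, so the following is only a minimal sketch of what they might look like. The Name property, the SampleData class, and the department names are assumptions made for illustration; only Id and Department appear in the grid’s column markup.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model: only Id and Department are confirmed by the grid markup;
// Name is assumed because the lookup code searches by a selected name.
public class Employee
{
    public int Id { get; set; }
    public string Name { get; set; }
    public string Department { get; set; }
}

public static class SampleData
{
    // Builds `count` employees spread evenly across three made-up departments.
    public static List<Employee> Create(int count)
    {
        string[] departments = { "Sales", "Support", "Engineering" };
        return Enumerable.Range(1, count)
            .Select(i => new Employee
            {
                Id = i,
                Name = "Employee " + i,
                Department = departments[(i - 1) % departments.Length]
            })
            .ToList();
    }
}
```

With something like this in place, the constructor could populate the field with `MyData = SampleData.Create(50);`.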

With all that in place, I can start managing the grid’s initial state in my GridInitializing method. I don’t want my method to slow down the grid’s initialization process any more than necessary, so I’ve declared the method as async void. The skeleton of my GridInitializing method looks like this:

    async void GridInitializing(GridStateEventArgs<Employee> e)
    {
    }

There’s a lot that you can do in the OnStateInit event (for example, the documentation for this event shows how to load your grid’s state from local storage to support offline processing). However, as the documentation points out, this method is called as the grid is initializing so there also are some things that you can’t do in the event (at least, not right now).

For example, the grid has a GetState method that returns the grid’s complete state… but calling that method in the OnStateInit method will stop your grid from displaying its data. Fortunately, though, there’s an easier way to access the grid’s state than calling the GetState method: The parameter passed to your OnStateInit method includes a GridState property that gives you access to the grid’s state (though, even reading some properties on the GridState property in OnStateInit—the Width property on a ColumnState, for example—will also stop the grid from displaying its data).

In order to have the grid display and select a specific employee, I need to do three things:

- Find the matching employee in the collection displayed in the grid (I wrote a little helper method that returns both the matching object and its position in the collection)

- Set the grid to display the page that the employee appears on

- Make that row in the grid the currently selected row

And that takes just four lines of code in the OnStateInit event. First, I need to call my helper method to get the object and its position:

    Employee sd;
    int index = FindIndex(selectedName, out sd);
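The helper itself isn’t shown in this post. One way it could be written, assuming it searches by a Name property and returns a 1-based position (0 when nothing matches), is sketched below. The Name property, the EmployeeLookup class, and the extra data parameter are illustrative assumptions; the call above takes only the name and the out parameter, presumably because the real helper reads the MyData field directly.

```csharp
using System;
using System.Collections.Generic;

public class Employee
{
    public int Id { get; set; }
    public string Name { get; set; }        // assumed property
    public string Department { get; set; }
}

public static class EmployeeLookup
{
    // Returns the 1-based position of the first employee whose Name matches,
    // or 0 if there is no match (in which case `found` is null).
    public static int FindIndex(IEnumerable<Employee> data, string name, out Employee found)
    {
        int position = 0;
        foreach (Employee candidate in data)
        {
            position++;
            if (string.Equals(candidate.Name, name, StringComparison.Ordinal))
            {
                found = candidate;
                return position;
            }
        }

        found = null;
        return 0;
    }
}
```

Returning 0 for “not found” gives the caller a cheap way to skip the paging and selection steps when the requested employee isn’t in the collection.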

Next, I calculate the page number from the item’s position and use that to set the page to be displayed when the grid finishes initializing:

e.GridState.Page = (int) Math.Ceiling(((decimal)index / pageSize));
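The formula above gives the right page when index is a 1-based position and the grid’s pages are numbered from 1: with a page size of 10, positions 1 to 10 land on page 1 and position 11 lands on page 2. If the helper returned a zero-based index instead, the same formula would be off by one for the first row of every page. Here is a small sketch of both variants using integer arithmetic (the Paging class and its method names are hypothetical):

```csharp
public static class Paging
{
    // 1-based position -> 1-based page. For position >= 1 this matches
    // (int)Math.Ceiling((decimal)position / pageSize).
    public static int PageFromPosition(int position, int pageSize)
    {
        return (position + pageSize - 1) / pageSize;
    }

    // 0-based index -> 1-based page.
    public static int PageFromZeroBasedIndex(int index, int pageSize)
    {
        return index / pageSize + 1;
    }
}
```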

Finally, to make that object the currently selected employee, I add the object found by my helper method to a collection that I shove into the GridState’s SelectedItems collection:

e.GridState.SelectedItems = new List<Employee> { sd };

And I’m done: When the grid finishes initializing, the employee the user requested will be on the grid page, displayed to the user and already selected.

Responding to State Changes: Markup

While OnStateInit lets you control the grid’s initial state, OnStateChanged lets you manage what happens as the user interacts with the grid. The grid takes a very broad view of what counts as a state change: it includes not only changing, adding, or deleting objects in the grid but also changes to the shape of the grid (grouping), the way the objects are displayed (sorting and filtering), and more.

While you can respond to all those changes in the OnStateChanged event, in any particular application you’ll probably care about only a few of them. The OnStateChanged event handler is called frequently, so if too much goes on in it, the grid’s performance can suffer. You can address that by, first, making your event handler run asynchronously and, second, executing code only when you need to.

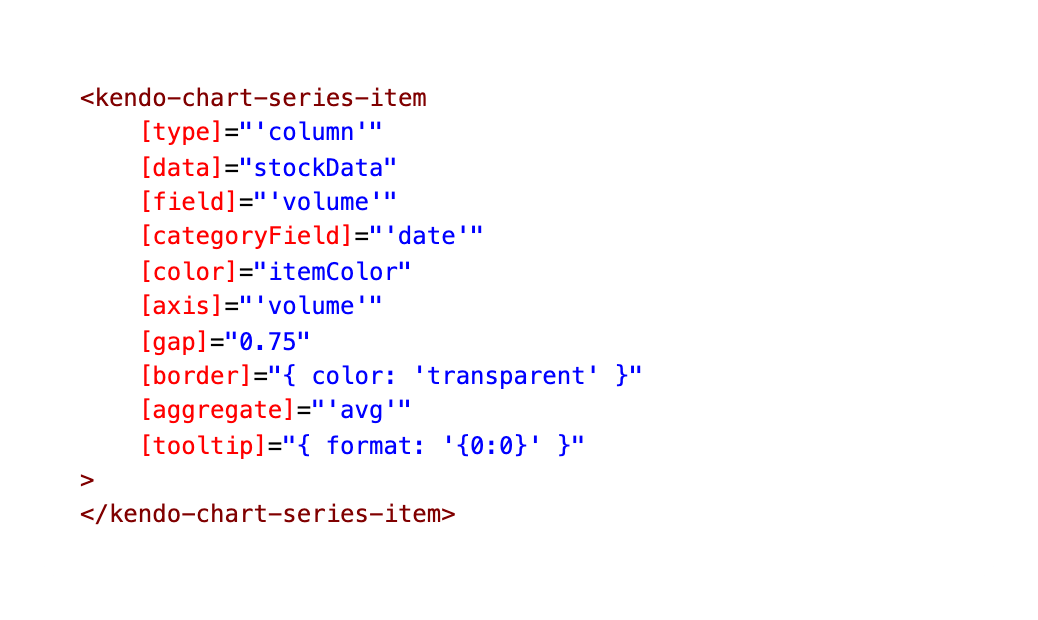

As an example of what you can do in OnStateChanged, when the user selects an employee in the grid (a state change), I’ll automatically filter the grid to show only the employees in the same department as the selected employee. To support that, I need to add a GridCheckboxColumn to the grid to let the user select an employee and trigger the state change (selecting an employee also adds that selected employee to the grid’s SelectedItems collection).

Here’s the markup that adds that column along with some of the other columns in the grid:

    <GridColumns>
        <GridCheckboxColumn SelectAll="false" Title="Select" Width="70px" />
        <GridColumn Field="@(nameof(Employee.Id))" Width="120px" />
        <GridColumn Field="@(nameof(Employee.Department))" Title="Team" />
        …rest of the columns…

My first step is to wire up a method to the grid’s OnStateChanged event. The syntax for this is very similar to what’s required in the OnStateInit event:

    @ref="theGrid"
    OnStateChanged="(GridStateEventArgs<Employee> args) => GridChanging(args)">

Responding to State Changes: Code

Those similarities extend to the skeleton for the method, which accepts the same GridStateEventArgs parameter as the OnStateInit event handler. I’ve marked the method as asynchronous:

    async void GridChanging(GridStateEventArgs<Employee> e)
    {
    }

Next, I’ll check whether I need to do anything at all. The OnStateChanged event is raised a couple of times before the grid is fully initialized so I first see if the grid is ready to be used by checking the field referencing the grid: If the field’s not null, the grid is ready.

My second step is to check whether the state change is one that I’m interested in. The PropertyName property on the parameter passed to the method will tell you what part of the grid’s state has triggered OnStateChanged. In my case, I want to take action when PropertyName is set to “SelectedItems.”

Finally, I check for any conditions relevant to my action. In my case, the SelectedItems state will change both if an Employee object is added to the SelectedItems collection and if an Employee object is removed. I only want to do something if an Employee is present in the collection, so I check the SelectedItems count.

As a result, the first thing I do inside my method is make sure all of those conditions are met before I do anything. That code looks like this:

    if (theGrid != null &&
        e.PropertyName == "SelectedItems" &&
        e.GridState.SelectedItems.Count > 0)
    {
    }
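Because this handler runs on every state change, it can help to keep the three checks in one small function that can be exercised without a grid. The following is a sketch only: the StateChangeGuard class and the ShouldFilter method are hypothetical names, and the extra null check on the collection is a defensive assumption rather than something the grid is known to require.

```csharp
using System.Collections.Generic;

public static class StateChangeGuard
{
    // Returns true only when all three conditions from the text hold:
    // the grid reference is set, the change concerns SelectedItems,
    // and at least one item is currently selected.
    public static bool ShouldFilter<T>(object gridRef, string propertyName, ICollection<T> selectedItems)
    {
        return gridRef != null
            && propertyName == "SelectedItems"
            && selectedItems != null      // defensive; assumed, not documented
            && selectedItems.Count > 0;
    }
}
```

In the handler, the call would look something like `StateChangeGuard.ShouldFilter(theGrid, e.PropertyName, e.GridState.SelectedItems)`.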

My next step is to grab the selected Employee object in the GridState’s SelectedItems collection:

    {
        Employee sd = e.GridState.SelectedItems.First();

Modifying the grid’s state is pretty straightforward: I create a new GridState object, modify the parts of the state that I’m interested in, and then merge my modified GridState into the grid’s existing state using the DataGrid’s SetState method.

Here’s the code that creates a GridState object (called filteredState) and then adds a FilterDescriptor that limits the displayed rows to ones in the same department as the currently selected Employee object:

    GridState<Employee> filteredState = new GridState<Employee>();
    filteredState.FilterDescriptors = new List<FilterDescriptorBase>()
    {
        new FilterDescriptor()
        {
            Member = "Department",
            Operator = FilterOperator.IsEqualTo,
            Value = sd.Department,
            MemberType = typeof(string)
        }
    };

To trigger filtering, I just need to merge this modified state into the grid’s state, using SetState. The SetState method is awaitable so, to make sure my grid remains responsive, I use SetState with the await keyword to have it run asynchronously, like this:

await theGrid.SetState(filteredState);
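To sanity-check which rows should remain once the filter is applied, the same condition can be expressed in plain LINQ over the source collection. This only illustrates the expected result, not how the grid filters internally. The DepartmentFilter class is hypothetical, the Name property is assumed, and the comparison here is case-sensitive, which may differ from the grid’s behavior.

```csharp
using System.Collections.Generic;
using System.Linq;

public class Employee
{
    public int Id { get; set; }
    public string Name { get; set; }        // assumed property
    public string Department { get; set; }
}

public static class DepartmentFilter
{
    // Returns the employees that share a department with the selected one.
    public static List<Employee> SameDepartment(IEnumerable<Employee> data, Employee selected)
    {
        return data.Where(e => e.Department == selected.Department).ToList();
    }
}
```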

While there are additional lifecycle events associated with the DataGrid (OnUpdate, OnCreate, etc.), the OnStateInit and OnStateChanged events let you go beyond handling the data in the grid to manage virtually every other part of it.
